You should be able to ask questions of your learning data, and the answers should tell you a story, such as which training content is most effective, who your most active learners are, or how people are applying what they learned.

So when there’s little to no change in your data, you should ask yourself: Why isn’t there a change? In this blog post, we explore why no change in your data might be more meaningful than you realize.

What are common reasons for little to no change in learning data?

No change could mean different things in different contexts, but will usually indicate a continuation. For example:

- Learner engagement levels continue at their current levels.

- Assessment scores continue to stay in the same range.

- Salespeople continue to sell at the same levels after training.

That continuation, however, doesn’t always mean things are “sitting still.” You might see a continuation in some rate of change, such as growth in platform adoption. In this case, the user count is growing (because more are adopting), but the rate of adoption isn’t going up or down.
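As a minimal sketch of that distinction, the snippet below uses made-up weekly user counts (the numbers and variable names are assumptions for illustration, not real platform data). The user count rises every week, yet the adoption rate itself shows no change.

```python
# Hypothetical weekly counts of active platform users (illustrative data only).
weekly_users = [100, 120, 140, 160, 180, 200]

def first_differences(values):
    """Return the change between each consecutive pair of values."""
    return [later - earlier for earlier, later in zip(values, values[1:])]

# Adoption rate: how many users were added each week.
new_users_per_week = first_differences(weekly_users)
# Change in the adoption rate: all zeros means the growth is a steady continuation.
change_in_rate = first_differences(new_users_per_week)

print(new_users_per_week)  # [20, 20, 20, 20, 20]
print(change_in_rate)      # [0, 0, 0, 0]
```

Which level you treat as "no change" (the count, or the rate at which the count moves) depends on the question you are asking.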

Either way, observing continuation often indicates that other metrics that have changed did not have much impact on the target metric you’re watching.

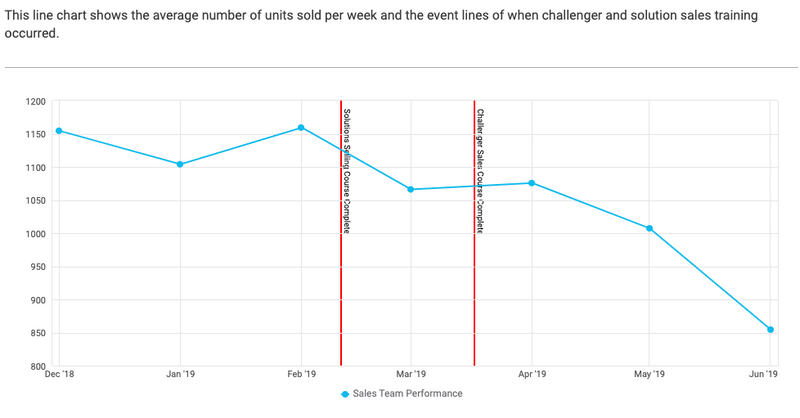

For instance, consider sales results continuing at their current levels after a major training initiative. In this case, “number of salespeople who completed the new training” changed, while “total sales closed” did not change—indicating the new training may not have had any impact on total sales closed.

When is no change in data more intriguing than change?

In hypothesis testing, no change is often as intriguing as change. Either outcome helps conclude an experiment and provides a new piece of information.

In a world of opportunity cost, avoiding efforts that don’t have an impact is a necessary part of maximizing efforts that do have an impact. Knowing what efforts, costs, or variables don’t work will get you one step closer toward understanding those that do, or free up resources for those that do.

No change might also reveal issues with your data collection and measurement. Perhaps your test or measurement isn’t sensitive enough, or you’re not capturing the information you think you are.

No change might also represent success in some cases. Perhaps you’re worried a success metric will suffer due to a cost-saving change you’re implementing. In this case, observing no change in the result metric could be an indication that you’ve implemented the right cost-savings plan.

So how do you determine if these data issues exist in your organization?

There are really two ways to study relationships, or the lack of relationships, represented in data: experimental studies and correlational studies. Because experimental studies require the researcher to control conditions as the data is generated, historical data can usually support only correlational studies.

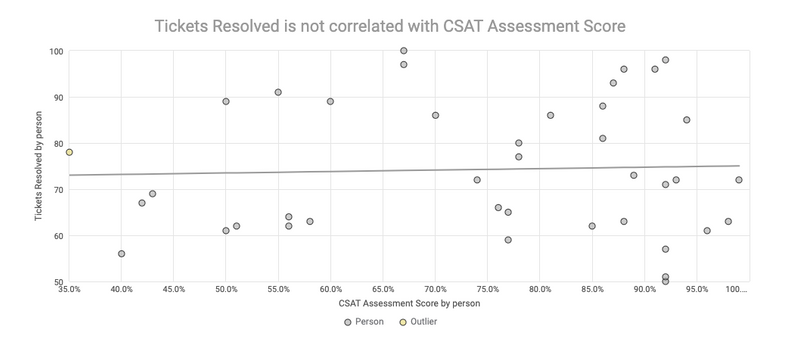

In this case, looking at correlations of different variables can reveal where:

- changes in data are related to others, or

- expected variables appear to be uncorrelated with the result metric.

The latter category can include a surprising number of factors, which can be quite important in the search for scrap learning and other cost-saving opportunities.

For example, if you are looking for a correlation between training and sales results, you might end up looking at a very wide set of individual courses and curricula, with even the most fundamental pieces of training showing no correlation.
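A rough sketch of such a scan is shown here. The course names, the per-salesperson numbers, and the `WEAK_THRESHOLD` cutoff are all hypothetical, chosen only to illustrate the idea. A course that lands on the "uncorrelated" list is a prompt for more questions, not proof that it has no effect.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable never changes")
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data: one entry per salesperson (illustrative only).
total_sales_closed = [10, 12, 14, 16, 18, 20, 22, 24]
course_completion = {  # 1 = completed the course, 0 = did not
    "product_basics":       [0, 0, 0, 0, 1, 1, 1, 1],
    "compliance_refresher": [1, 0, 0, 1, 1, 0, 0, 1],
}

WEAK_THRESHOLD = 0.2  # assumed cutoff for "appears uncorrelated"

correlations = {
    course: pearson(completed, total_sales_closed)
    for course, completed in course_completion.items()
}
uncorrelated = [
    course for course, r in correlations.items() if abs(r) < WEAK_THRESHOLD
]

for course, r in correlations.items():
    print(f"{course}: r = {r:.2f}")
print("Appears uncorrelated with sales:", uncorrelated)
```

In this toy data set, only `compliance_refresher` falls below the threshold, so it is the one flagged for a closer look.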

How do you resolve these data issues? Even better, how do you prevent them?

It depends on the context of “no change.” In some cases, resolving issues could mean ending an expensive license for learning content, or ending a learning initiative, that has no observed relationship with results. Or, resolving could mean:

- fixing the way data is captured or measured if there are errors in the collection and observation process, or

- tuning the sensitivity and expression of a particular measurement.

If you aren’t finding any meaningful relationships or changes in correlational studies, the resolution may be to adopt a more direct experimental approach that involves design and testing. This can also be the clearest way to get beyond correlation and actually establish causation.

How do you explain these issues to stakeholders and leadership?

Carefully! Deliberately.

You should avoid presenting results as conclusive. For example, if your correlational studies show no relationship between a particular learning initiative and the success metric in question, you don’t want to announce “our training programs are completely ineffective.”

First, not being able to prove something is different from disproving it. Second, and just as important, your audience is almost certain to include stakeholders who are invested in the programs in question and may become defensive against the idea that they have wasted their efforts and resources.

Instead, attempt to present data in a way that invites the audience to reach their own logical conclusions. Or better yet, ask new questions that could shed more light on the results. Data analysis is fundamentally powered by asking questions, and generating more questions is like adding fuel to the engine of discovering insights.

When there’s not a good answer, how can you change the question you’re asking of your data?

It’s important to consider what assumptions might be implied in your question itself. If you are searching data for strong correlation coefficients among variables, it’s important to realize you’re fundamentally assuming that relationships of interest will be linear in nature.

For example, studies of anxiety vs. performance show a curvilinear relationship: performance initially improves as anxiety rises, then falls as anxiety continues to climb. A correlational study using linear regression is likely to miss this pattern, even though it is an important insight.
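To make the linearity assumption concrete, here is a small sketch with an idealized, made-up inverted-U curve (the anxiety scale, the peak at 5, and the formula are assumptions for illustration, not values from any study). The straight-line correlation comes out at zero even though performance is completely determined by anxiety. Correlating against distance from the peak instead reveals the relationship.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical anxiety scores from 0 to 10, with performance peaking at 5.
PEAK = 5
anxiety = list(range(11))
performance = [25 - (a - PEAK) ** 2 for a in anxiety]

# A straight-line correlation sees nothing: the rise and the fall cancel out.
r_linear = pearson(anxiety, performance)

# Re-expressing the question as "distance from the peak" exposes the pattern.
distance_from_peak = [(a - PEAK) ** 2 for a in anxiety]
r_curved = pearson(distance_from_peak, performance)

print(f"linear r = {r_linear:.2f}")
print(f"curved r = {r_curved:.2f}")
```

Real data will be noisier and the peak will not be known in advance, so plotting the variables against each other before relying on a single coefficient is usually a sensible first step.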

You might also find that your question should incorporate more of a time-specific element. For example, imagine that completing a sales training program has a strong effect on a salesperson’s closed sales—but only for the three weeks following the program, after which the effect “wears off.”

If you only do a broad study of the relationship between completion of the program and total sales, you may find no relationship because the important temporal effect is just a small portion of the overall time period included per person in your study.
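The dilution described above can be sketched with simulated numbers. Everything in this example is hypothetical: eight salespeople, a flat weekly baseline, a boost of 6 extra sales per week lasting 3 weeks, and training placed at week 20. The same effect looks negligible over a full year and strong inside the window where it actually occurs.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Assumed parameters for the simulation (illustrative only).
WEEKS_IN_YEAR = 52
TRAINING_WEEK = 20   # week index in which the program is completed
EFFECT_WEEKS = 3     # how long the boost lasts before it "wears off"
WEEKLY_BOOST = 6     # extra closed sales per week while the effect lasts

def weekly_sales(baseline, trained):
    """Flat weekly sales, plus a temporary boost for trained salespeople."""
    sales = [baseline] * WEEKS_IN_YEAR
    if trained:
        for week in range(TRAINING_WEEK, TRAINING_WEEK + EFFECT_WEEKS):
            sales[week] += WEEKLY_BOOST
    return sales

# (baseline weekly sales, completed training?) for eight hypothetical people.
people = [(8, True), (10, True), (12, True), (14, True),
          (8, False), (10, False), (12, False), (14, False)]

completed = [1 if trained else 0 for _, trained in people]
histories = [weekly_sales(baseline, trained) for baseline, trained in people]

annual_totals = [sum(history) for history in histories]
window_totals = [
    sum(history[TRAINING_WEEK:TRAINING_WEEK + EFFECT_WEEKS])
    for history in histories
]

r_annual = pearson(completed, annual_totals)   # weak: effect is diluted
r_window = pearson(completed, window_totals)   # strong: effect is isolated

print(f"completion vs. annual sales:  r = {r_annual:.2f}")
print(f"completion vs. 3-week window: r = {r_window:.2f}")
```

In this toy setup, the annual correlation stays below 0.1 while the windowed correlation is around 0.8, because differences in people's baselines swamp a three-week boost once it is spread across 52 weeks.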

Finally, seeing no change could also indicate that you are missing some important data. If your perception of reality and anecdotal evidence suggest one thing, but the data doesn’t seem to reveal it, the data you’re using could be “blind” to some element or phenomenon you are observing outside of the data.

An inquiry into your own perception of the situation might also reveal new data points you should be collecting.

What assumptions are embedded in your questions?

The changes you’re looking for—and the questions you’re asking—are already narrowing the scope of what data you’ll be examining. So, expanding the set of questions, and the scope of questions, can create more opportunities for surprises.

Let the questions lead the way

Not asking the right questions could mean overlooking something really important or getting the wrong information altogether.

Remember to work backward to your questions and studies, starting from “what’s important.” Start with things that could be important discoveries, or high-level questions you know will have an impact for stakeholders. Then break those down into more specific questions that might lead you along that trail.

About the author

David Ells takes great pride in leading a dynamic team and turning innovative ideas into reality. His passion for technology and development found its roots at Rustici Software. During his tenure, he contributed significantly to the creation of SCORM Cloud, a groundbreaking product in the eLearning industry, and led the development of the world’s first learning record store powered by xAPI.
